DAGMan Workflows

DAGMan is an HTCondor tool that organizes multiple jobs into workflows, each represented as a directed acyclic graph (DAG). A DAGMan workflow automatically submits jobs in a particular order, such that certain jobs must complete before others start running. This allows the outputs of some jobs to be used as inputs for others, and makes it easy to rerun the same workflow in the future.

Describing Workflows with DAGMan

A DAGMan workflow is described in a DAG input file. The input file specifies the nodes of the DAG as well as the dependencies that order the DAG.

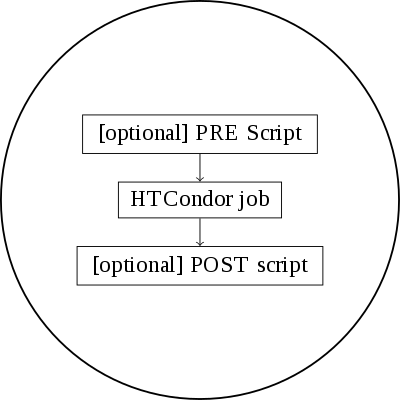

A node within a DAG represents a unit of work. It contains the following:

Job: An HTCondor job, defined in a submit file.

PRE script (optional): A script that runs before the job starts. Typically used to verify that all inputs are valid.

POST script (optional): A script that runs after the job finishes. Typically used to verify outputs and clean up temporary files.

The following diagram illustrates the elements of a node:

One Node within a DAG

An edge in DAGMan describes a dependency between two nodes. DAG edges are directional; each has a parent and a child, where the parent node must finish running before the child starts. Any node can have an unlimited number of parents and children.

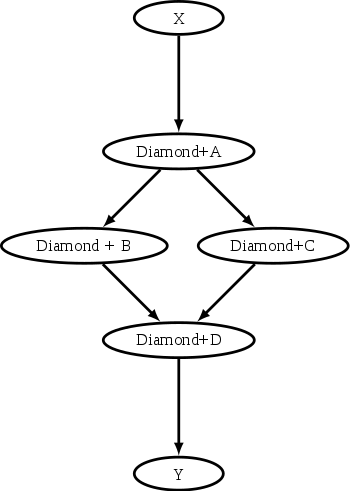

Example: Diamond DAG

A simple diamond-shaped DAG, shown in the following image, serves as the starting point for the examples. This DAG contains 4 nodes.

Diamond DAG

A very simple DAG input file for this diamond-shaped DAG is:

# File name: diamond.dag

JOB A A.condor

JOB B B.condor

JOB C C.condor

JOB D D.condor

PARENT A CHILD B C

PARENT B C CHILD D
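The ordering that these two PARENT lines impose can be sketched in Python. This is only an illustration of the dependency constraint, not how DAGMan is implemented; the `parents` mapping and the `run_order` helper are hypothetical names, and the standard-library `graphlib` module (Python 3.9 or later) is assumed.

```python
from graphlib import TopologicalSorter

# Map each node to the set of parents it waits on, mirroring
# "PARENT A CHILD B C" and "PARENT B C CHILD D" from diamond.dag.
parents = {
    "A": set(),
    "B": {"A"},
    "C": {"A"},
    "D": {"B", "C"},
}


def run_order(parents_of):
    """Return one valid order in which every parent precedes its children."""
    return list(TopologicalSorter(parents_of).static_order())


order = run_order(parents)
print(order)
```

Node A always comes first and node D last. B and C have no constraint between them, so either may appear before the other.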

A set of basic commands appearing in a DAG input file is described below.

JOB

The JOB command specifies an HTCondor job. The syntax used for each JOB command is:

JOB JobName SubmitDescriptionFileName [DIR directory] [NOOP] [DONE]

A JOB entry maps a JobName to an HTCondor submit description file. The JobName uniquely identifies nodes within the DAG input file and in output messages. Each node name, given by JobName, within the DAG must be unique.

The values defined for JobName and SubmitDescriptionFileName are case sensitive, as file names in a file system are case sensitive. The JobName can be any string that contains no white space, except for the strings PARENT and CHILD (in upper, lower, or mixed case). JobName also cannot contain special characters (‘.’, ‘+’) which are reserved for system use.
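The naming rules above can be checked before a DAG input file is written. The following is a minimal sketch of those rules only; `is_valid_job_name` is a hypothetical helper, not part of HTCondor, and DAGMan may apply further checks that are not described here.

```python
RESERVED_WORDS = {"PARENT", "CHILD"}   # rejected in upper, lower, or mixed case
RESERVED_CHARS = {".", "+"}            # reserved for system use


def is_valid_job_name(name: str) -> bool:
    """Apply the JobName rules stated in the text above."""
    if not name or any(ch.isspace() for ch in name):
        return False
    if name.upper() in RESERVED_WORDS:
        return False
    return not any(ch in RESERVED_CHARS for ch in name)


for candidate in ("A", "step_1", "Parent", "my.job", "two words"):
    print(candidate, is_valid_job_name(candidate))
```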

The optional DIR keyword specifies a working directory for this node, from which the HTCondor job will be submitted, and from which a PRE and/or POST script will be run. If a relative directory is specified, it is relative to the current working directory as the DAG is submitted. Note that a DAG containing DIR specifications cannot be run in conjunction with the -usedagdir command-line argument to condor_submit_dag.

The optional NOOP keyword indicates that the HTCondor job within the node is not to be submitted to HTCondor. This is useful for debugging a complex DAG structure: mark jobs as NOOP to verify that the control flow through the DAG is correct, then remove the NOOP keywords before submitting the real DAG. Any PRE and POST scripts for jobs specified with NOOP are still executed; to avoid running the PRE and POST scripts, comment them out. Even though the job specified with NOOP is not submitted, its submit description file must still exist.

The optional DONE keyword identifies a node as being already completed. This is mainly used by Rescue DAGs generated by DAGMan itself, in the event of a failure to complete the workflow. Users should generally not use the DONE keyword. The NOOP keyword is more flexible in avoiding the execution of a job within a node.

DATA

As of version 8.3.5, condor_dagman no longer supports DATA nodes.

PARENT … CHILD …

The PARENT … CHILD … command specifies the dependencies within the DAG. Nodes are parents and/or children within the DAG. A parent node must be completed successfully before any of its children may be started. A child node may only be started once all its parents have successfully completed.

The syntax used for each dependency (PARENT/CHILD) command is

PARENT ParentJobName [ParentJobName2 ... ] CHILD ChildJobName [ChildJobName2 ... ]

The PARENT keyword is followed by one or more ParentJobNames. The CHILD keyword is followed by one or more ChildJobNames. Each child job depends on every parent job within the line. A single line in the input file can specify the dependencies from one or more parents to one or more children. The diamond-shaped DAG example may specify the dependencies with

PARENT A CHILD B C

PARENT B C CHILD D

An alternative specification for the diamond-shaped DAG may specify some or all of the dependencies on separate lines:

PARENT A CHILD B C

PARENT B CHILD D

PARENT C CHILD D

As a further example, the line

PARENT p1 p2 CHILD c1 c2

produces four dependencies:

p1 to c1

p1 to c2

p2 to c1

p2 to c2
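The expansion of one PARENT … CHILD … line into individual dependencies can be sketched as follows. `expand_dependencies` is a hypothetical helper written for illustration; it assumes that the PARENT and CHILD keywords are matched regardless of case, and it does not reproduce DAGMan's own parser.

```python
def expand_dependencies(line: str):
    """Turn one PARENT ... CHILD ... line into (parent, child) pairs."""
    tokens = line.split()
    keywords = [token.upper() for token in tokens]
    if not keywords or keywords[0] != "PARENT" or "CHILD" not in keywords:
        raise ValueError(f"not a PARENT ... CHILD ... line: {line!r}")
    split = keywords.index("CHILD")
    parent_names = tokens[1:split]
    child_names = tokens[split + 1:]
    if not parent_names or not child_names:
        raise ValueError("at least one parent and one child are required")
    # Every child depends on every parent named on the line.
    return [(p, c) for p in parent_names for c in child_names]


for parent, child in expand_dependencies("PARENT p1 p2 CHILD c1 c2"):
    print(f"{parent} to {child}")
```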

SCRIPT

The optional SCRIPT command specifies processing that is done either before a job within a node is submitted, after a job within a node completes its execution, or when a job goes on hold.

PRE and POST scripts

Processing done before a job is submitted is called a PRE script. Processing done after a job completes its execution is called a POST script. Note that the executable specified does not have to be a shell script (Unix) or batch file (Windows), but it should be relatively lightweight, because it runs directly on the submit machine rather than being submitted as an HTCondor job.

The syntax used for each PRE or POST command is

SCRIPT [DEFER status time] PRE <JobName | ALL_NODES> ExecutableName [arguments]

SCRIPT [DEFER status time] POST <JobName | ALL_NODES> ExecutableName [arguments]

The SCRIPT command can use the PRE or POST keyword, which specifies the relative timing of when the script is to be run. The JobName identifies the node to which the script is attached. The ExecutableName specifies the executable (e.g., shell script or batch file) to be executed, and may not contain spaces. The optional arguments are command line arguments to the script, and spaces delimit the arguments. Both ExecutableName and optional arguments are case sensitive.

A PRE script is commonly used to place files in a staging area for the jobs to use. A POST script is commonly used to clean up or remove files once jobs are finished running. An example uses PRE and POST scripts to stage files that are stored on tape. The PRE script reads compressed input files from the tape drive, uncompresses them, and places the resulting files in the current directory. The HTCondor jobs can then use these files, producing output files. The POST script compresses the output files, writes them out to the tape, and then removes both the staged files and the output files.
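Because a PRE script can be any lightweight executable, an input check could be written in Python. The sketch below is an assumption-laden illustration: `check_inputs` and the file name `check_inputs.py` are hypothetical, and the rule "every input must exist and be non-empty" is just one possible notion of a valid input.

```python
import os


def check_inputs(paths):
    """Return 0 if every path is an existing, non-empty file, otherwise 1."""
    for path in paths:
        if not os.path.isfile(path) or os.path.getsize(path) == 0:
            return 1
    return 0


# A missing input yields a non-zero status, which would mark the PRE script
# as failed if this value were used as the script's exit code.
print(check_inputs(["/no/such/input/file"]))
```

A script built around this function would end with `sys.exit(check_inputs(sys.argv[1:]))` and could be attached to a node with a line such as `SCRIPT PRE B check_inputs.py B.gz`.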

HOLD scripts

Additionally, the SCRIPT command can take a HOLD keyword, which indicates an executable to be run when a job goes on hold. These are typically used to notify a user when something goes wrong with their jobs.

The syntax used for a HOLD command is

SCRIPT [DEFER status time] HOLD <JobName | ALL_NODES> ExecutableName [arguments]

Unlike PRE and POST scripts, HOLD scripts are not considered part of the DAG workflow and are run on a best-effort basis. If one does not complete successfully, it has no effect on the overall workflow and no error will be reported.

DEFER retries

The optional DEFER feature causes a retry of only the script when the script exits with the exit code given by status. Rather than being considered failed, the script is run again after at least time seconds. While waiting for the retry, the script does not count against a maxpre or maxpost limit. The position of DEFER within the SCRIPT specification is fixed: it must come directly after the SCRIPT keyword. This avoids backward compatibility issues for any DAG with a JobName of DEFER.

Scripts as part of a DAG workflow

Scripts are executed on the submit machine; the submit machine is not necessarily the same machine upon which the node’s job is run. Further, a single cluster of HTCondor jobs may be spread across several machines.

If the PRE script fails, then the HTCondor job associated with the node is not submitted, and (as of version 8.5.4) the POST script is not run either (by default). However, if the job is submitted, and there is a POST script, the POST script is always run once the job finishes. (The behavior when the PRE script fails may be changed to run the POST script by setting configuration variable DAGMAN_ALWAYS_RUN_POST to True or by passing the -AlwaysRunPost argument to condor_submit_dag.)

Progress towards completion of the DAG is based upon the success of the nodes within the DAG. The success of a node is based upon the success of the job(s), PRE script, and POST script. A job, PRE script, or POST script with an exit value not equal to 0 is considered failed. The exit value of whatever component of the node was run last determines the success or failure of the node. Table 2.1 lists the definition of node success and failure for all variations of script and job success and failure, when DAGMAN_ALWAYS_RUN_POST is set to False. In this table, a dash (-) represents the case where a script does not exist for the DAG, S represents success, and F represents failure. Table 2.2 lists the definition of node success and failure only for the cases where the PRE script fails, when DAGMAN_ALWAYS_RUN_POST is set to True.

| PRE | JOB | POST | Node |
|---|---|---|---|
| - | S | - | S |
| - | F | - | F |
| - | S | S | S |
| - | S | F | F |
| - | F | S | S |
| - | F | F | F |
| S | S | - | S |
| S | F | - | F |
| S | S | S | S |
| S | S | F | F |
| S | F | S | S |
| S | F | F | F |
| F | not run | - | F |
| F | not run | not run | F |

Table 2.1: Node Success or Failure definition with DAGMAN_ALWAYS_RUN_POST = False (the default).

| PRE | JOB | POST | Node |
|---|---|---|---|
| F | not run | - | F |
| F | not run | S | S |
| F | not run | F | F |

Table 2.2: Node Success or Failure definition with DAGMAN_ALWAYS_RUN_POST = True.
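The rule behind both tables, that the last component actually run decides the node's outcome, can be expressed as a short function. This is a sketch that models the tables, not DAGMan source code; `node_succeeds` is a hypothetical name, and `None` is used here to mean that a script is not defined for the node.

```python
from typing import Optional


def node_succeeds(pre: Optional[int], job: int, post: Optional[int],
                  always_run_post: bool = False) -> bool:
    """Decide node success from exit codes (None means no such script)."""
    if pre is not None and pre != 0:
        # The PRE script failed, so the job is never submitted.
        if always_run_post and post is not None:
            return post == 0   # Table 2.2: the POST script decides
        return False           # Table 2.1: the node fails outright
    if post is not None:
        return post == 0       # the POST script ran last, so it decides
    return job == 0            # no POST script: the job decides


# Row "- F S S" of Table 2.1: the job fails, but the POST script succeeds.
print(node_succeeds(pre=None, job=1, post=0))
```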

Special script argument macros

The five macros $JOB, $RETRY, $MAX_RETRIES, $DAG_STATUS and $FAILED_COUNT can be used within the DAG input file as arguments passed to a PRE or POST script. The three macros $JOBID, $RETURN, and $PRE_SCRIPT_RETURN can be used as arguments to POST scripts. The use of these variables is limited to being used as an individual command line argument to the script, surrounded by spaces, in order to cause the substitution of the variable’s value.

The special macros are as follows:

$JOB evaluates to the (case sensitive) string defined for JobName.

$RETRY evaluates to an integer value set to 0 the first time a node is run, and is incremented each time the node is retried. See Advanced Features of DAGMan for the description of how to cause nodes to be retried.

$MAX_RETRIES evaluates to an integer value set to the maximum number of retries for the node. See Advanced Features of DAGMan for the description of how to cause nodes to be retried. If no retries are set for the node, $MAX_RETRIES will be set to 0.

$JOBID (for POST scripts only) evaluates to a representation of the HTCondor job ID of the node job. It is the value of the job ClassAd attribute ClusterId, followed by a period, and then followed by the value of the job ClassAd attribute ProcId. An example of a job ID might be 1234.0. For nodes with multiple jobs in the same cluster, the ProcId value is the one of the last job within the cluster.

$RETURN (for POST scripts only) evaluates to the return value of the HTCondor job, if there is a single job within a cluster. With multiple jobs within the same cluster, there are two cases to consider. In the first case, all jobs within the cluster are successful; the value of $RETURN will be 0, indicating success. In the second case, one or more jobs from the cluster fail. When condor_dagman sees the first terminated event for a job that failed, it assigns that job’s return value as the value of $RETURN, and it attempts to remove all remaining jobs within the cluster. Therefore, if multiple jobs in the cluster fail with different exit codes, a race condition determines which exit code gets assigned to $RETURN.

A job that dies due to a signal is reported with a $RETURN value representing the additive inverse of the signal number. For example, SIGKILL (signal 9) is reported as -9. A job whose batch system submission fails is reported as -1001. A job that is externally removed from the batch system queue (by something other than condor_dagman) is reported as -1002.

$PRE_SCRIPT_RETURN (for POST scripts only) evaluates to the return value of the PRE script of a node, if there is one. If there is no PRE script, this value will be -1. If the node job was skipped because of failure of the PRE script, the value of $RETURN will be -1004 and the value of $PRE_SCRIPT_RETURN will be the exit value of the PRE script; the POST script can use this to see if the PRE script exited with an error condition, and assign success or failure to the node, as appropriate.

$DAG_STATUS is the status of the DAG. Note that this macro’s value and definition is unrelated to the attribute named DagStatus as defined for use in a node status file. This macro’s value is the same as the job ClassAd attribute DAG_Status that is defined within the condor_dagman job’s ClassAd. This macro may have the following values:

0: OK

1: error; an error condition different than those listed here

2: one or more nodes in the DAG have failed

3: the DAG has been aborted by an ABORT-DAG-ON specification

4: removed; the DAG has been removed by condor_rm

5: cycle; a cycle was found in the DAG

6: halted; the DAG has been halted (see Suspending a Running DAG)

$FAILED_COUNT is defined by the number of nodes that have failed in the DAG.
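A POST script that receives $RETURN as an argument may want to translate the value into a readable message. The following is a minimal sketch using only the special values listed above; `describe_return` is a hypothetical helper, and treating every other negative value as a signal number is an assumption made for this example.

```python
SPECIAL_RETURN_VALUES = {
    -1001: "job submission failed",
    -1002: "job was removed from the queue externally",
    -1004: "job was skipped because the PRE script failed",
}


def describe_return(value: int) -> str:
    """Summarize a $RETURN value passed to a POST script."""
    if value in SPECIAL_RETURN_VALUES:
        return SPECIAL_RETURN_VALUES[value]
    if value == 0:
        return "success"
    if value < 0:
        # Assumption: any other negative value is the negated signal number.
        return f"killed by signal {-value}"
    return f"failed with exit code {value}"


for code in (0, 3, -9, -1001, -1002, -1004):
    print(code, describe_return(code))
```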

Examples that use PRE or POST scripts

Examples use the diamond-shaped DAG. A first example uses a PRE script to expand a compressed file needed as input to each of the HTCondor jobs of nodes B and C. The DAG input file:

# File name: diamond.dag

JOB A A.condor

JOB B B.condor

JOB C C.condor

JOB D D.condor

SCRIPT PRE B pre.sh $JOB .gz

SCRIPT PRE C pre.sh $JOB .gz

PARENT A CHILD B C

PARENT B C CHILD D

The script pre.sh uses its command line arguments to form the file name of the compressed file. The script contains

#!/bin/sh

gunzip ${1}${2}

Therefore, the PRE script invokes

gunzip B.gz

for node B, which uncompresses file B.gz, placing the result in file B.

A second example uses the $RETURN macro. The DAG input file contains the POST script specification:

SCRIPT POST A stage-out job_status $RETURN

If the HTCondor job of node A exits with the value -1, the POST script is invoked as

stage-out job_status -1

The slightly different example POST script specification in the DAG input file

SCRIPT POST A stage-out job_status=$RETURN

invokes the POST script with

stage-out job_status=$RETURN

This example shows that when there is no space between the = sign and the variable $RETURN, there is no substitution of the macro’s value.
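The whole-argument substitution rule illustrated by these two examples can be modeled in a few lines. This is a sketch of the documented behavior, not DAGMan's implementation; `substitute_macros` and the `values` dictionary are hypothetical.

```python
def substitute_macros(arguments: str, values: dict) -> list:
    """Replace a macro only when it stands alone as a whole argument."""
    return [values.get(token, token) for token in arguments.split()]


values = {"$RETURN": "-1", "$JOB": "A"}

# The macro is its own argument, so it is replaced.
print(substitute_macros("job_status $RETURN", values))

# The macro is glued to "job_status=", so the argument is passed unchanged.
print(substitute_macros("job_status=$RETURN", values))
```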

PRE_SKIP

The behavior of DAGMan with respect to node success or failure can be changed with the addition of a PRE_SKIP command. A PRE_SKIP line within the DAG input file uses the syntax:

PRE_SKIP <JobName | ALL_NODES> non-zero-exit-code

The PRE script of a node identified by JobName that exits with the value given by non-zero-exit-code skips the remainder of the node entirely. Neither the job associated with the node nor the POST script will be executed, and the node will be marked as successful.

Node Job Submit File Contents

Each node in a DAG may use a unique submit description file. A key limitation is that each HTCondor submit description file must submit jobs described by a single cluster number; DAGMan cannot deal with a submit description file producing multiple job clusters.

Consider again the diamond-shaped DAG example, where each node job uses the same submit description file.

# File name: diamond.dag

JOB A diamond_job.condor

JOB B diamond_job.condor

JOB C diamond_job.condor

JOB D diamond_job.condor

PARENT A CHILD B C

PARENT B C CHILD D

Here is a sample HTCondor submit description file for this DAG:

# File name: diamond_job.condor

executable = /path/diamond.exe

output = diamond.out.$(cluster)

error = diamond.err.$(cluster)

log = diamond_condor.log

universe = vanilla

queue

Since each node uses the same HTCondor submit description file, each node within the DAG runs the same job. The $(Cluster) macro produces unique file names for each job’s output.

The job ClassAd attribute DAGParentNodeNames is also available for use within the submit description file. It defines a comma separated list of each JobName which is a parent node of this job’s node. This attribute may be used in the arguments command for all but scheduler universe jobs. For example, if the job has two parents, with JobNames B and C, the submit description file command

arguments = $$([DAGParentNodeNames])

will pass the string "B,C" as the command line argument when invoking the job.

DAGMan supports jobs with queues of multiple procs, so for example:

queue 500

will queue 500 procs as expected.

Inline Submit Descriptions

Instead of using a submit description file, you can alternatively include an inline submit description directly inside the .dag file. An inline submit description should be wrapped in { and } braces, with each argument appearing on a separate line, just like the contents of a regular submit file. Using the previous diamond-shaped DAG example, the diamond.dag file would look like this:

# File name: diamond.dag

JOB A {

executable = /path/diamond.exe

output = diamond.out.$(cluster)

error = diamond.err.$(cluster)

log = diamond_condor.log

universe = vanilla

}

JOB B {

executable = /path/diamond.exe

output = diamond.out.$(cluster)

error = diamond.err.$(cluster)

log = diamond_condor.log

universe = vanilla

}

JOB C {

executable = /path/diamond.exe

output = diamond.out.$(cluster)

error = diamond.err.$(cluster)

log = diamond_condor.log

universe = vanilla

}

JOB D {

executable = /path/diamond.exe

output = diamond.out.$(cluster)

error = diamond.err.$(cluster)

log = diamond_condor.log

universe = vanilla

}

PARENT A CHILD B C

PARENT B C CHILD D

This can be helpful when managing many submit descriptions, because they can all be described in the same file instead of requiring you to switch regularly between many files.

The main drawback of using inline submit descriptions is that they do not support the queue statement or any variations thereof. Any job described inline in the .dag file will only have a single instance submitted.

SUBMIT-DESCRIPTION command

In addition to declaring inline submit descriptions as part of a job, they can be declared independently of jobs using the SUBMIT-DESCRIPTION command. This can help reduce the size and improve the readability of a .dag file when many nodes are running the same job.

A SUBMIT-DESCRIPTION can be defined using the following syntax:

SUBMIT-DESCRIPTION DescriptionName {

# submit attributes go here

}

An independently declared submit description must have a unique name that is not used by any of the jobs. It can then be linked to a job as follows:

JOB JobName DescriptionName

For example, the previous diamond.dag example could be written as follows:

# File name: diamond.dag

SUBMIT-DESCRIPTION DiamondDesc {

executable = /path/diamond.exe

output = diamond.out.$(cluster)

error = diamond.err.$(cluster)

log = diamond_condor.log

universe = vanilla

}

JOB A DiamondDesc

JOB B DiamondDesc

JOB C DiamondDesc

JOB D DiamondDesc

PARENT A CHILD B C

PARENT B C CHILD D
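When many nodes share one description, the DAG input file is easy to generate from a script. The sketch below produces the same text as the example above; `diamond_dag` is a hypothetical helper, and the four-space indentation inside the braces is a formatting choice of this example only.

```python
def diamond_dag(description_name: str, submit_lines: list) -> str:
    """Build diamond.dag text with one shared SUBMIT-DESCRIPTION."""
    lines = [f"SUBMIT-DESCRIPTION {description_name} {{"]
    lines += [f"    {line}" for line in submit_lines]
    lines.append("}")
    # All four nodes reuse the single description declared above.
    lines += [f"JOB {node} {description_name}" for node in "ABCD"]
    lines += ["PARENT A CHILD B C", "PARENT B C CHILD D"]
    return "\n".join(lines) + "\n"


dag_text = diamond_dag("DiamondDesc", [
    "executable = /path/diamond.exe",
    "output     = diamond.out.$(cluster)",
    "error      = diamond.err.$(cluster)",
    "log        = diamond_condor.log",
    "universe   = vanilla",
])
print(dag_text)
```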

DAG Submission

A DAG is submitted using the tool condor_submit_dag. The manual page for condor_submit_dag details the command. The simplest of DAG submissions has the syntax

$ condor_submit_dag DAGInputFileName

and the current working directory contains the DAG input file.

The diamond-shaped DAG example may be submitted with

$ condor_submit_dag diamond.dag

Do not submit the same DAG, using the same DAG input file, from the same directory while an earlier instance of that DAG is still running. Doing so fails in an unpredictable manner, because each instance of the DAG attempts to use the same file to enforce dependencies.

To increase robustness and guarantee recoverability, the condor_dagman process is run as an HTCondor job. As such, it needs a submit description file. condor_submit_dag generates this needed submit description file, naming it by appending .condor.sub to the name of the DAG input file. This submit description file may be edited if the DAG is submitted with

$ condor_submit_dag -no_submit diamond.dag

causing condor_submit_dag to create the submit description file, but not submit condor_dagman to HTCondor. To submit the DAG, once the submit description file is edited, use

$ condor_submit diamond.dag.condor.sub

Submit machines with limited resources are supported by command line options that place limits on the submission and handling of HTCondor jobs and PRE and POST scripts. Presented here are descriptions of the command line options to condor_submit_dag. These same limits can be set in configuration. Each limit is applied within a single DAG.

DAG Throttling

Total nodes/clusters: The -maxjobs option specifies the maximum number of clusters that condor_dagman can submit at one time. Since each node corresponds to a single cluster, this limit restricts the number of nodes that can be submitted (in the HTCondor queue) at a time. It is commonly used when there is a limited amount of input file staging capacity. As a specific example, consider a case where each node represents a single HTCondor proc that requires 4 MB of input files, and the proc will run in a directory with a volume of 100 MB of free space. Using the argument -maxjobs 25 guarantees that a maximum of 25 clusters, using a maximum of 100 MB of space, will be submitted to HTCondor at one time. (See the condor_submit_dag manual page for more information.) Also see the equivalent DAGMAN_MAX_JOBS_SUBMITTED configuration option (Configuration File Entries for DAGMan).

Idle procs: The number of idle procs within a given DAG can be limited with the optional command line argument -maxidle. condor_dagman will not submit any more node jobs until the number of idle procs in the DAG goes below this specified value, even if there are ready nodes in the DAG. This allows condor_dagman to submit jobs in a way that adapts to the load on the HTCondor pool at any given time. If the pool is lightly loaded, condor_dagman will end up submitting more jobs; if the pool is heavily loaded, condor_dagman will submit fewer jobs. (See the condor_submit_dag manual page for more information.) Also see the equivalent DAGMAN_MAX_JOBS_IDLE configuration option (Configuration File Entries for DAGMan).

Subsets of nodes: Node submission can also be throttled in a finer-grained manner by grouping nodes into categories. See section Advanced Features of DAGMan for more details.

PRE/POST scripts: Since PRE and POST scripts run on the submit machine, it may be desirable to limit the number of PRE or POST scripts running at one time. The optional -maxpre command line argument limits the number of PRE scripts that may be running at one time, and the optional -maxpost command line argument limits the number of POST scripts that may be running at one time. (See the condor_submit_dag manual page for more information.) Also see the equivalent DAGMAN_MAX_PRE_SCRIPTS and DAGMAN_MAX_POST_SCRIPTS configuration options (Configuration File Entries for DAGMan).
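The staging arithmetic in the -maxjobs example, and the way the throttling options combine on one command line, can be sketched as below. Both helpers are hypothetical; the code only assembles an argument list from the options named in this section and does not run condor_submit_dag.

```python
def max_jobs_for_space(free_mb: int, per_job_mb: int) -> int:
    """Largest number of clusters whose inputs fit in the free space."""
    return free_mb // per_job_mb


def submit_command(dag_file, maxjobs=None, maxidle=None,
                   maxpre=None, maxpost=None):
    """Assemble a condor_submit_dag argument list with optional throttles."""
    command = ["condor_submit_dag"]
    throttles = (("-maxjobs", maxjobs), ("-maxidle", maxidle),
                 ("-maxpre", maxpre), ("-maxpost", maxpost))
    for flag, value in throttles:
        if value is not None:
            command += [flag, str(value)]
    command.append(dag_file)
    return command


limit = max_jobs_for_space(free_mb=100, per_job_mb=4)
print(" ".join(submit_command("diamond.dag", maxjobs=limit)))
```

The resulting list could be passed to `subprocess.run` on a machine where HTCondor is installed.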

File Paths in DAGs

condor_dagman assumes that all relative paths in a DAG input file and the associated HTCondor submit description files are relative to the current working directory when condor_submit_dag is run. This works well for submitting a single DAG. It presents problems when multiple independent DAGs are submitted with a single invocation of condor_submit_dag. Each of these independent DAGs would logically be in its own directory, such that it could be run or tested independent of other DAGs. Thus, all references to files will be designed to be relative to the DAG’s own directory.

Consider an example DAG within a directory named dag1. There would be a DAG input file, named one.dag for this example. Assume the contents of this DAG input file specify a node job with

JOB A A.submit

Further assume that partial contents of submit description file A.submit specify

executable = programA

input = A.input

Directory contents are

dag1/

├── A.input

├── A.submit

├── one.dag

└── programA

All file paths are correct relative to the dag1 directory.

Submission of this example DAG sets the current working directory to dag1 and invokes condor_submit_dag:

$ cd dag1

$ condor_submit_dag one.dag

Expand this example such that there are now two independent DAGs, each contained within its own directory. For simplicity, assume that the DAG in dag2 has files and file naming very similar to the DAG in dag1. Assume that the directory contents are

parent/

├── dag1

│   ├── A.input

│   ├── A.submit

│   ├── one.dag

│   └── programA

└── dag2

    ├── B.input

    ├── B.submit

    ├── programB

    └── two.dag

The goal is to use a single invocation of condor_submit_dag to run both dag1 and dag2. The invocation

$ cd parent

$ condor_submit_dag dag1/one.dag dag2/two.dag

does not work. Path names are now relative to parent, which is not the desired behavior.

The solution is the -usedagdir command line argument to condor_submit_dag. This feature runs each DAG as if condor_submit_dag had been run in the directory in which the relevant DAG file exists. A working invocation is

$ cd parent

$ condor_submit_dag -usedagdir dag1/one.dag dag2/two.dag

Output files will be placed in the correct directory, and the .dagman.out file will also be in the correct directory. A Rescue DAG file will be written to the current working directory, which is the directory from which condor_submit_dag is invoked. The Rescue DAG should be run from that same current working directory. The Rescue DAG includes all the path information necessary to run each node job in the proper directory.

Use of -usedagdir does not work in conjunction with a JOB node specification within the DAG input file using the DIR keyword. Using both will be detected and generate an error.
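The difference that -usedagdir makes to relative paths can be modeled with a small path calculation. This is a sketch of the rule described in this section, not DAGMan code; `resolve` and its parameters are hypothetical, and POSIX-style paths are assumed.

```python
from pathlib import PurePosixPath


def resolve(relative_path, dag_file, submit_dir, use_dag_dir=False):
    """Return where a relative path from a DAG input file is looked up."""
    base = PurePosixPath(submit_dir)
    if use_dag_dir:
        # With -usedagdir, paths are taken relative to the DAG file's directory.
        base = base / PurePosixPath(dag_file).parent
    return base / relative_path


# Without -usedagdir, A.submit is looked for in parent/ itself.
print(resolve("A.submit", "dag1/one.dag", "/parent"))

# With -usedagdir, it is found next to one.dag.
print(resolve("A.submit", "dag1/one.dag", "/parent", use_dag_dir=True))
```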

DAG Monitoring

After submission, the progress of the DAG can be monitored by looking at the job event log file(s) or observing the e-mail that job submission to HTCondor causes, or by using condor_q -dag.

Detailed information about a DAG’s job progress can be obtained using

$ condor_q -l <dagman-job-id>

There is also a large amount of information logged in an extra file. The name of this extra file is produced by appending .dagman.out to the name of the DAG input file; for example, if the DAG input file is diamond.dag, this extra file is named diamond.dag.dagman.out. The .dagman.out file is an important resource for debugging; save this file if a problem occurs. The .dagman.out file is appended to, rather than overwritten, with each new DAGMan run.

Editing a Running DAG

Certain properties of a running DAG can be changed after the workflow has been started. The values of these properties are published in the condor_dagman job ad; changing any of these properties using condor_qedit will also update the internal DAGMan value.

Currently, you can change the following attributes:

| Attribute Name | Attribute Description |
|---|---|
| DAGMan_MaxJobs | Maximum number of running jobs |
| DAGMan_MaxIdle | Maximum number of idle jobs |
| DAGMan_MaxPreScripts | Maximum number of running PRE scripts |
| DAGMan_MaxPostScripts | Maximum number of running POST scripts |

To edit one of these properties, use the condor_qedit tool with the job ID of the condor_dagman job, for example:

$ condor_qedit <dagman-job-id> DAGMan_MaxJobs 1000

To view all the properties of a condor_dagman job:

$ condor_q -l <dagman-job-id> | grep DAGMan

Removing a DAG

To remove an entire DAG, consisting of the condor_dagman job, plus any jobs submitted to HTCondor, remove the condor_dagman job by running condor_rm. For example,

$ condor_q

-- Submitter: turunmaa.cs.wisc.edu : <128.105.175.125:36165> : turunmaa.cs.wisc.edu

ID OWNER SUBMITTED RUN_TIME ST PRI SIZE CMD

9.0 taylor 10/12 11:47 0+00:01:32 R 0 8.7 condor_dagman -f -

11.0 taylor 10/12 11:48 0+00:00:00 I 0 3.6 B.out

12.0 taylor 10/12 11:48 0+00:00:00 I 0 3.6 C.out

3 jobs; 2 idle, 1 running, 0 held

$ condor_rm 9.0

When a condor_dagman job is removed, all node jobs (including sub-DAGs) of that condor_dagman will be removed by the condor_schedd. As of version 8.5.8, the default is that condor_dagman itself also removes the node jobs (to fix a race condition that could result in “orphaned” node jobs). (The condor_schedd has to remove the node jobs to deal with the case of removing a condor_dagman job that has been held.)

The previous behavior of condor_dagman itself not removing the node jobs can be restored by setting the DAGMAN_REMOVE_NODE_JOBS configuration macro (see Configuration File Entries for DAGMan) to False. This will decrease the load on the condor_schedd, at the cost of allowing the possibility of “orphaned” node jobs.

A removed DAG will be considered failed unless the DAG has a FINAL node that succeeds.

In the case where a machine is scheduled to go down, DAGMan will clean up memory and exit. However, it will leave any submitted jobs in the HTCondor queue.

Suspending a Running DAG

It may be desired to temporarily suspend a running DAG. For example, the load may be high on the submit machine, and therefore it is desired to prevent DAGMan from submitting any more jobs until the load goes down. There are two ways to suspend (and resume) a running DAG.

Use condor_hold/condor_release on the condor_dagman job.

After placing the condor_dagman job on hold, no new node jobs will be submitted, and no PRE or POST scripts will be run. Any node jobs already in the HTCondor queue will continue undisturbed. Any running PRE or POST scripts will be killed. If the condor_dagman job is left on hold, it will remain in the HTCondor queue after all of the currently running node jobs are finished. To resume the DAG, use condor_release on the condor_dagman job.

Note that while the condor_dagman job is on hold, no updates will be made to the dagman.out file.

Use a DAG halt file.

The second way of suspending a DAG uses the existence of a specially-named file to change the state of the DAG. When in this halted state, no PRE scripts will be run, and no node jobs will be submitted. Running node jobs will continue undisturbed. A halted DAG will still run POST scripts, and it will still update the dagman.out file. This differs from the behavior of a DAG that is held. Furthermore, a halted DAG will not remain in the queue indefinitely; when all of the running node jobs have finished, DAGMan will create a Rescue DAG and exit.

To resume a halted DAG, remove the halt file.

The specially-named file must be placed in the same directory as the DAG input file. The naming is the same as the DAG input file concatenated with the string .halt. For example, if the DAG input file is test1.dag, then test1.dag.halt will be the required name of the halt file.

As any DAG is first submitted with condor_submit_dag, a check is made for a halt file. If one exists, it is removed.

Note that neither condor_hold nor a DAG halt is propagated to sub-DAGs. In other words, if you condor_hold or create a halt file for a DAG that has sub-DAGs, any sub-DAGs that are already in the queue will continue to submit node jobs.

A condor_hold or DAG halt does, however, apply to splices, because they are merged into the parent DAG and controlled by a single condor_dagman instance.

Advanced Features of DAGMan

Retrying Failed Nodes

DAGMan can retry any failed node in a DAG by specifying the node in the DAG input file with the RETRY command. The use of retry is optional. The syntax for retry is

RETRY <JobName | ALL_NODES> NumberOfRetries [UNLESS-EXIT value]

where JobName identifies the node. NumberOfRetries is an integer number of times to retry the node after failure. The implied number of retries for any node is 0, the same as not having a retry line in the file. Retry is implemented on nodes, not parts of a node.

The diamond-shaped DAG example may be modified to retry node C:

# File name: diamond.dag

JOB A A.condor

JOB B B.condor

JOB C C.condor

JOB D D.condor

PARENT A CHILD B C

PARENT B C CHILD D

RETRY C 3

If node C is marked as failed for any reason, then it is started over as a first retry. The node will be retried a second and third time if it continues to fail, for a total of up to four attempts. If the node is marked as successful, then further retries do not occur.

Retry of a node may be short circuited using the optional keyword UNLESS-EXIT, followed by an integer exit value. If the node exits with the specified integer exit value, then no further processing will be done on the node.

The macro $RETRY evaluates to an integer value, set to 0 the first time a node is run, and incremented each time the node is retried. The macro $MAX_RETRIES is the value set for NumberOfRetries. These macros may be used as arguments passed to a PRE or POST script.
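The retry rules can be modeled with a short Python sketch. It is not DAGMan's implementation: the function name run_node_with_retries is hypothetical, and the sketch assumes that a node succeeds exactly when its attempt returns 0, which ignores the role PRE and POST scripts play in deciding node success.

```python
from typing import Callable, List, Optional


def run_node_with_retries(
    run_attempt: Callable[[int], int],
    number_of_retries: int,
    unless_exit: Optional[int] = None,
) -> List[int]:
    """Model RETRY: return the exit code of every attempt that was made.

    run_attempt receives the retry count (0 for the first run), which is
    the value the $RETRY macro would have for that attempt.
    """
    exit_codes = []
    for retry in range(number_of_retries + 1):  # first run plus the retries
        code = run_attempt(retry)
        exit_codes.append(code)
        if code == 0:
            break  # assumed success: no further retries
        if unless_exit is not None and code == unless_exit:
            break  # UNLESS-EXIT value seen: stop retrying
    return exit_codes
```

With RETRY C 3, a node that always fails is attempted four times in this model, while a node that exits with the UNLESS-EXIT value is attempted only once.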

Stopping the Entire DAG

The ABORT-DAG-ON command provides a way to abort the entire DAG if a given node returns a specific exit code. The syntax for ABORT-DAG-ON is

ABORT-DAG-ON <JobName | ALL_NODES> AbortExitValue [RETURN DAGReturnValue]

If the return value of the node specified by JobName matches AbortExitValue, the DAG is immediately aborted. A DAG abort differs from a node failure, in that a DAG abort causes all nodes within the DAG to be stopped immediately. This includes removing the jobs in nodes that are currently running. A node failure differs, as it would allow the DAG to continue running, until no more progress can be made due to dependencies.

The behavior differs based on the existence of PRE and/or POST scripts. If a PRE script returns the AbortExitValue value, the DAG is immediately aborted. If the HTCondor job within a node returns the AbortExitValue value, the DAG is aborted if the node has no POST script. If the POST script returns the AbortExitValue value, the DAG is aborted.

An abort overrides node retries. If a node returns the abort exit value, the DAG is aborted, even if the node has retry specified.

When a DAG aborts, by default it exits with the node return value that caused the abort. This can be changed by using the optional RETURN keyword along with specifying the desired DAGReturnValue. The DAG abort return value can be used for DAGs within DAGs, allowing an inner DAG to cause an abort of an outer DAG.

A DAG return value other than 0, 1, or 2 will cause the condor_dagman job to stay in the queue after it exits and get retried, unless the on_exit_remove expression in the .condor.sub file is manually modified.

Adding ABORT-DAG-ON for node C in the diamond-shaped DAG

# File name: diamond.dag

JOB A A.condor

JOB B B.condor

JOB C C.condor

JOB D D.condor

PARENT A CHILD B C

PARENT B C CHILD D

RETRY C 3

ABORT-DAG-ON C 10 RETURN 1

causes the DAG to be aborted, if node C exits with a return value of 10. Any other currently running nodes, of which only node B is a possibility for this particular example, are stopped and removed. If this abort occurs, the return value for the DAG is 1.
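The abort rules described in this section can be summarized as a small decision function. This is a sketch of the documented behavior only; the function name and the stage labels "pre", "job", and "post" are hypothetical choices made for the illustration.

```python
from typing import Optional


def dag_abort_return_value(
    stage: str,
    exit_code: int,
    abort_exit_value: int,
    has_post_script: bool,
    dag_return_value: Optional[int] = None,
) -> Optional[int]:
    """Return the DAG's exit value if this result aborts the DAG, else None.

    stage is "pre", "job", or "post" (labels assumed for this sketch).
    """
    if exit_code != abort_exit_value:
        return None
    # A job's exit code only triggers the abort when no POST script exists.
    if stage == "job" and has_post_script:
        return None
    # RETURN overrides the default of exiting with the node's return value.
    return exit_code if dag_return_value is None else dag_return_value
```

For the line ABORT-DAG-ON C 10 RETURN 1, a job in node C (which has no POST script) exiting with 10 gives dag_abort_return_value("job", 10, 10, False, 1), which evaluates to 1.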

Variable Values Associated with Nodes

Macros defined for DAG nodes can be used within the submit description file of the node job. The VARS command provides a method for defining a macro. Macros are defined on a per-node basis, using the syntax

VARS <JobName | ALL_NODES> [PREPEND | APPEND] macroname="string" [macroname2="string2" ... ]

The macro may be used within the submit description file of the relevant node. A macroname may contain alphanumeric characters (a-z, A-Z, and 0-9) and the underscore character. The space character delimits macros, such that there may be more than one macro defined on a single line. Multiple lines defining macros for the same node are permitted.

Correct syntax requires that the string must be enclosed in double quotes. To use a double quote mark within a string, escape the double quote mark with the backslash character (\). To add the backslash character itself, use two backslashes (\\).

A restriction is that the macroname itself cannot begin with the string queue, in any combination of upper or lower case letters.
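When VARS lines are generated by a script, the quoting and naming rules above are easy to get wrong. The following Python sketch applies them; vars_line is a hypothetical helper, and it deliberately covers only plain macro names made of letters, digits, and underscores.

```python
import re


def vars_line(node: str, macroname: str, value: str) -> str:
    """Build one VARS line, escaping backslashes and double quotes."""
    if not re.fullmatch(r"[A-Za-z0-9_]+", macroname):
        raise ValueError(f"invalid macro name: {macroname!r}")
    if macroname.lower().startswith("queue"):
        raise ValueError("macro names cannot begin with 'queue'")
    # Escape backslashes first so the quote escapes are not doubled.
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return f'VARS {node} {macroname}="{escaped}"'
```

For example, vars_line("A", "state", "Wisconsin") produces the line VARS A state="Wisconsin".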

Examples

If the DAG input file contains

# File name: diamond.dag

JOB A A.submit

JOB B B.submit

JOB C C.submit

JOB D D.submit

VARS A state="Wisconsin"

PARENT A CHILD B C

PARENT B C CHILD D

then the submit description file A.submit may use the macro state.

Consider this submit description file A.submit:

# file name: A.submit

executable = A.exe

log = A.log

arguments = "$(state)"

queue

The macro value expands to become a command-line argument in the invocation of the job. The job is invoked with

A.exe Wisconsin

The use of macros may allow a reduction in the number of distinct submit description files. A separate example shows this intended use of VARS. In the case where the submit description file for each node varies only in file naming, macros reduce the number of submit description files to one.

This example references a single submit description file for each of the nodes in the DAG input file, and it uses the VARS entry to name files used by each job.

The relevant portion of the DAG input file appears as

JOB A theonefile.sub

JOB B theonefile.sub

JOB C theonefile.sub

VARS A filename="A"

VARS B filename="B"

VARS C filename="C"

The submit description file appears as

# submit description file called: theonefile.sub

executable = progX

output = $(filename)

error = error.$(filename)

log = $(filename).log

queue

For a DAG such as this one, but with thousands of nodes, the ability to write and maintain a single submit description file together with a single, yet more complex, DAG input file is worthwhile.
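For DAGs with thousands of nodes, the DAG input file itself is usually generated by a script. A minimal Python sketch of such a generator follows; the function name single_submit_dag_lines is hypothetical, and the sketch assumes each node's filename macro is simply the node name, as in the example above.

```python
from typing import Iterable, List


def single_submit_dag_lines(node_names: Iterable[str], submit_file: str) -> List[str]:
    """Return JOB lines followed by VARS lines, all sharing one submit file."""
    names = list(node_names)
    job_lines = [f"JOB {name} {submit_file}" for name in names]
    # Each node gets its own value for the filename macro.
    vars_lines = [f'VARS {name} filename="{name}"' for name in names]
    return job_lines + vars_lines
```

Calling single_submit_dag_lines(["A", "B", "C"], "theonefile.sub") reproduces the JOB and VARS lines of the example; the lines can then be written to the DAG input file.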

Prepend or Append Variables to Node

After JobName, the word PREPEND or APPEND can be added to specify how a variable is passed to a node at job submission time. APPEND will add the variable after the submit description file is read, so the passed variable is either added as a new macro or overwrites any already existing value. PREPEND will add the variable before the submit description file is read. This allows the variable to be used in submit description file conditionals.

The relevant portion of the DAG input file appears as

JOB A theotherfile.sub

VARS A PREPEND var1="A"

VARS A APPEND var2="B"

The submit description file appears as

# submit description file called: theotherfile.sub

executable = progX

if defined var1

# This will occur due to PREPEND

Arguments = "$(var1) was prepended"

else

# This will occur due to APPEND

Arguments = "No variables prepended"

endif

var2 = "C"

output = results-$(var2).out

error = error.txt

log = job.log

queue

For a DAG such as this one, Arguments will become “A was prepended” and the output file will be named results-B.out. If instead var1 used APPEND and var2 used PREPEND then Arguments will become “No variables prepended” and the output file will be named results-C.out.

If neither PREPEND nor APPEND is used in the VARS line then the variable will either be prepended or appended based on the configuration variable DAGMAN_DEFAULT_APPEND_VARS.
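The ordering effect of PREPEND and APPEND can be modeled as successive dictionary updates. This Python sketch is a simplified model, not how condor_submit parses files; both function names are hypothetical.

```python
from typing import Dict


def resolve_macros(
    prepend_vars: Dict[str, str],
    submit_file_macros: Dict[str, str],
    append_vars: Dict[str, str],
) -> Dict[str, str]:
    """Model the final macro values: later definitions override earlier ones."""
    resolved = dict(prepend_vars)        # defined before the file is read
    resolved.update(submit_file_macros)  # assignments made in the submit file
    resolved.update(append_vars)         # added after the file is read
    return resolved


def vars_seen_by_conditionals(prepend_vars: Dict[str, str], name: str) -> bool:
    """Of the VARS-supplied variables, only prepended ones exist while the
    submit file's 'if defined' lines are evaluated."""
    return name in prepend_vars
```

In the example above, var2 is appended with the value B, so it overrides the assignment var2 = "C" in the submit file; if var2 were prepended instead, the assignment in the file would win.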

Multiple macroname definitions

If a macro name for a specific node in a DAG is defined more than once, as it would be with the partial file contents

JOB job1 job1.submit

VARS job1 a="foo"

VARS job1 a="bar"

a warning is written to the log, of the format

Warning: VAR <macroname> is already defined in job <JobName>

Discovered at file "<DAG input file name>", line <line number>

The behavior of DAGMan is such that all definitions for the macro exist, but only the last one defined is used as the variable’s value. Using this example, if the job1.submit submit description file contains

arguments = "$(a)"

then the argument will be bar.

Special characters within VARS string definitions

The value defined for a macro may contain spaces and tabs. It is also possible to have double quote marks and backslashes within a value. In order to have spaces or tabs within a value specified for a command line argument, use the New Syntax format for the arguments submit command, as described in condor_submit. Escapes for double quote marks depend on whether the New Syntax or Old Syntax format is used for the arguments submit command. Note that in both syntaxes, double quote marks require two levels of escaping: one level is for the parsing of the DAG input file, and the other level is for passing the resulting value through condor_submit.

As of HTCondor version 8.3.7, single quotes are permitted within the value specification. For the specification of command line arguments, single quotes can be used in three ways:

in Old Syntax, within a macro’s value specification

in New Syntax, within a macro’s value specification

in New Syntax only, to delimit an argument containing white space

There are examples of all three cases below. In New Syntax, to pass a single quote as part of an argument, escape it with another single quote for condor_submit parsing as in the example’s NodeA fourth macro.

As an example that shows uses of all special characters, here are only the relevant parts of a DAG input file. Note that the NodeA value for the macro second contains a tab.

VARS NodeA first="Alberto Contador"

VARS NodeA second="\"\"Andy Schleck\"\""

VARS NodeA third="Lance\\ Armstrong"

VARS NodeA fourth="Vincenzo ''The Shark'' Nibali"

VARS NodeA misc="!@#$%^&*()_-=+=[]{}?/"

VARS NodeB first="Lance_Armstrong"

VARS NodeB second="\\\"Andreas_Kloden\\\""

VARS NodeB third="Ivan_Basso"

VARS NodeB fourth="Bernard_'The_Badger'_Hinault"

VARS NodeB misc="!@#$%^&*()_-=+=[]{}?/"

VARS NodeC args="'Nairo Quintana' 'Chris Froome'"

Consider an example in which the submit description file for NodeA uses the New Syntax for the arguments command:

arguments = "'$(first)' '$(second)' '$(third)' '$(fourth)' '$(misc)'"

The single quotes around each variable reference are only necessary if the variable value may contain spaces or tabs. The resulting values passed to the NodeA executable are:

Alberto Contador

"Andy Schleck"

Lance\ Armstrong

Vincenzo 'The Shark' Nibali

!@#$%^&*()_-=+=[]{}?/

Consider an example in which the submit description file for NodeB uses the Old Syntax for the arguments command:

arguments = $(first) $(second) $(third) $(fourth) $(misc)

The resulting values passed to the NodeB executable are:

Lance_Armstrong

"Andreas_Kloden"

Ivan_Basso

Bernard_'The_Badger'_Hinault

!@#$%^&*()_-=+=[]{}?/

Consider an example in which the submit description file for NodeC uses the New Syntax for the arguments command:

arguments = "$(args)"

The resulting values passed to the NodeC executable are:

Nairo Quintana

Chris Froome

Using special macros within a definition

The $(JOB) and $(RETRY) macros may be used within a definition of the string that defines a variable. This usage requires parentheses, such that proper macro substitution may take place when the macro’s value is only a portion of the string.

$(JOB) expands to the node JobName. If the VARS line appears in a DAG file used as a splice file, then $(JOB) will be the fully scoped name of the node.

For example, the DAG input file lines

JOB NodeC NodeC.submit

VARS NodeC nodename="$(JOB)"

set nodename to NodeC, and the DAG input file lines

JOB NodeD NodeD.submit

VARS NodeD outfilename="$(JOB)-output"

set outfilename to NodeD-output.

$(RETRY) expands to 0 the first time a node is run; the value is incremented each time the node is retried. For example:

VARS NodeE noderetry="$(RETRY)"
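The substitution of these two special macros can be illustrated with a short Python sketch. The function expand_special_macros is hypothetical and performs plain textual replacement only; it does not model the scoping of node names in splices.

```python
def expand_special_macros(value: str, job_name: str, retry: int) -> str:
    """Substitute $(JOB) and $(RETRY) inside a VARS value string."""
    return value.replace("$(JOB)", job_name).replace("$(RETRY)", str(retry))
```

For node NodeD, expand_special_macros("$(JOB)-output", "NodeD", 0) yields NodeD-output, matching the example above.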

Using VARS to define ClassAd attributes

The macroname may also begin with a + character, in which case it names a ClassAd attribute. For example, the VARS specification

VARS NodeF +A="\"bob\""

results in the job ClassAd attribute

A = "bob"

Note that ClassAd string values must be quoted, hence there are escaped quotes in the example above. The outer quotes are consumed in the parsing of the DAG input file, so the escaped inner quotes remain in the definition of the attribute value.

Continuing this example, it allows the HTCondor submit description file for NodeF to use the following line:

arguments = "$$([A])"

The special macros may also be used. For example

VARS NodeG +B="$(RETRY)"

places the numerical attribute

B = 1

into the ClassAd when the NodeG job is run for a second time; this is the first retry, so $(RETRY) has the value 1.

Setting Priorities for Nodes

The PRIORITY command assigns a priority to a DAG node (and to the HTCondor job(s) associated with the node). The syntax for PRIORITY is

PRIORITY <JobName | ALL_NODES> PriorityValue

The priority value is an integer (which can be negative). A larger numerical priority is better. The default priority is 0.

The node priority affects the order in which nodes that are ready (all of their parent nodes have finished successfully) at the same time will be submitted. The node priority also sets the node job’s priority in the queue (that is, its JobPrio attribute), which affects the order in which jobs will be run once they are submitted (see Job Priority for more information). The node priority only affects the order of job submission within a given DAG; but once jobs are submitted, their JobPrio value affects the order in which they will be run relative to all jobs submitted by the same user.

Sub-DAGs can have priorities, just as “regular” nodes can. (The priority of a sub-DAG will affect the priorities of its nodes: see “effective node priorities” below.) Splices cannot be assigned a priority, but individual nodes within a splice can be assigned priorities.

Note that node priority does not override the DAG dependencies. Also note that node priorities are not guarantees of the relative order in which nodes will be run, even among nodes that become ready at the same time - so node priorities should not be used as a substitute for parent/child dependencies. In other words, priorities should be used when it is preferable, but not required, that some jobs run before others. (The order in which jobs are run once they are submitted can be affected by many things other than the job’s priority; for example, whether there are machines available in the pool that match the job’s requirements.)

PRE scripts can affect the order in which jobs run, so DAGs containing PRE scripts may not submit the nodes in exact priority order, even if doing so would satisfy the DAG dependencies.

Node priority is most relevant if node submission is throttled (via the -maxjobs or -maxidle command-line arguments or the DAGMAN_MAX_JOBS_SUBMITTED or DAGMAN_MAX_JOBS_IDLE configuration variables), or if there are not enough resources in the pool to immediately run all submitted node jobs. This is often the case for DAGs with large numbers of “sibling” nodes, or DAGs running on heavily-loaded pools.

Example

Adding PRIORITY for node C in the diamond-shaped DAG:

# File name: diamond.dag

JOB A A.condor

JOB B B.condor

JOB C C.condor

JOB D D.condor

PARENT A CHILD B C

PARENT B C CHILD D

RETRY C 3

PRIORITY C 1

This will cause node C to be submitted (and, most likely, run) before node B. Without this priority setting for node C, node B would be submitted first because the “JOB” statement for node B comes earlier in the DAG file than the “JOB” statement for node C.
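The intended ordering among nodes that become ready at the same time can be sketched as a stable sort. This is a simplified model under the assumption that ties fall back to the order of the JOB statements, as described above; as noted earlier, priorities are not guarantees, and submission_order is a hypothetical name.

```python
from typing import Dict, List


def submission_order(ready_nodes: List[str], priorities: Dict[str, int]) -> List[str]:
    """Order ready nodes by priority (larger first), keeping DAG-file order for ties.

    ready_nodes is assumed to be listed in the order of the JOB statements.
    Nodes without an explicit PRIORITY line use the default priority of 0.
    """
    # sorted() is stable, so nodes with equal priority keep their input order.
    return sorted(ready_nodes, key=lambda node: -priorities.get(node, 0))
```

With PRIORITY C 1, submission_order(["B", "C"], {"C": 1}) places C ahead of B; without any priorities, B stays first.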

Effective node priorities

The “effective” priority for a node (the priority controlling the order in which nodes are actually submitted, and which is assigned to JobPrio) is the sum of the explicit priority (specified in the DAG file) and the priority of the DAG itself. DAG priorities also default to 0, so they are most relevant for sub-DAGs (although a top-level DAG can be submitted with a non-zero priority by specifying a -priority value on the condor_submit_dag command line). This algorithm for calculating effective priorities is a simplification introduced in version 8.5.7 (a node’s effective priority is no longer dependent on the priorities of its parents).

Here is an example to clarify:

# File name: priorities.dag

JOB A A.sub

SUBDAG EXTERNAL B SD.dag

PARENT A CHILD B

PRIORITY A 60

PRIORITY B 100

# File name: SD.dag

JOB SA SA.sub

JOB SB SB.sub

PARENT SA CHILD SB

PRIORITY SA 10

PRIORITY SB 20

In this example (assuming that priorities.dag is submitted with the default priority of 0), the effective priority of node A will be 60, and the effective priority of sub-DAG B will be 100. Therefore, the effective priority of node SA will be 110 and the effective priority of node SB will be 120.
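The arithmetic in this example can be checked with a few lines of Python. The function effective_priority is a hypothetical helper that applies the sum described above.

```python
def effective_priority(explicit_priority: int, dag_priority: int = 0) -> int:
    """Effective priority = explicit node priority + priority of its DAG."""
    return explicit_priority + dag_priority


# priorities.dag is submitted with the default DAG priority of 0.
node_a = effective_priority(60)
sub_dag_b = effective_priority(100)

# SD.dag runs as sub-DAG B, so its DAG priority is B's effective priority.
node_sa = effective_priority(10, sub_dag_b)
node_sb = effective_priority(20, sub_dag_b)
```

The results are 60 for A, 100 for B, 110 for SA, and 120 for SB, as stated above.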

The effective priorities listed above are assigned by DAGMan. There is no way to change the priority in the submit description file for a job, as DAGMan will override any priority command placed in a submit description file (unless the effective node priority is 0; in this case, any priority specified in the submit file will take effect).

Throttling Nodes by Category

In order to limit the number of submitted job clusters within a DAG, the nodes may be placed into categories by assignment of a name. Then, a maximum number of submitted clusters may be specified for each category.

The CATEGORY command assigns a category name to a DAG node. The syntax for CATEGORY is

CATEGORY <JobName | ALL_NODES> CategoryName

Category names cannot contain white space.

The MAXJOBS command limits the number of submitted job clusters on a per category basis. The syntax for MAXJOBS is

MAXJOBS CategoryName MaxJobsValue

If the number of submitted job clusters for a given category reaches the limit, no further job clusters in that category will be submitted until other job clusters within the category terminate. If MAXJOBS is not set for a defined category, then there is no limit placed on the number of submissions within that category.

Note that a single invocation of condor_submit results in one job cluster. The number of HTCondor jobs within a cluster may be greater than 1.

The configuration variable DAGMAN_MAX_JOBS_SUBMITTED and the condor_submit_dag -maxjobs command-line option are still enforced if these CATEGORY and MAXJOBS throttles are used.
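The per-category check can be sketched as follows. This Python model covers only the CATEGORY and MAXJOBS throttle; it does not model the DAG-wide -maxjobs limit, and the function name may_submit is hypothetical.

```python
from typing import Dict, Optional


def may_submit(
    category: Optional[str],
    submitted_per_category: Dict[str, int],
    maxjobs: Dict[str, int],
) -> bool:
    """Return True if a node's job cluster may be submitted under MAXJOBS."""
    if category is None or category not in maxjobs:
        return True  # no category, or no MAXJOBS limit set for it
    # Submission is blocked once the category's limit has been reached.
    return submitted_per_category.get(category, 0) < maxjobs[category]
```

For instance, with a limit of 2 for a category named transfer (an illustrative name), a third node in that category waits until one of the two submitted job clusters terminates.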

Please see the end of Advanced Features of DAGMan on DAG Splicing for a description of the interaction between categories and splices.

Configuration Specific to a DAG

All configuration variables and their definitions that relate to DAGMan may be found in Configuration File Entries for DAGMan.

Configuration variables for condor_dagman can be specified in several ways, as given within the ordered list:

In an HTCondor configuration file.

With an environment variable. Prepend the string _CONDOR_ to the configuration variable’s name.

With a line in the DAG input file using the keyword CONFIG, such that there is a configuration file specified that is specific to an instance of condor_dagman. The configuration file specification may instead be specified on the condor_submit_dag command line using the -config option.

For some configuration variables, a condor_submit_dag command-line argument sets the configuration variable. For example, the configuration variable DAGMAN_MAX_JOBS_SUBMITTED has the corresponding command line argument -maxjobs.

For this ordered list, configuration values specified or parsed later in the list override ones specified earlier. For example, a value specified on the condor_submit_dag command line overrides corresponding values in any configuration file. And, a value specified in a DAGMan-specific configuration file overrides values specified in a general HTCondor configuration file.
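The override rule amounts to "the last source that defines a value wins". A minimal Python sketch, assuming each source has already been reduced to a dictionary keyed by the plain configuration variable name (resolve_setting is a hypothetical helper):

```python
from typing import Dict, List, Optional


def resolve_setting(name: str, sources: List[Dict[str, str]]) -> Optional[str]:
    """Return the value from the last source in the list that defines name."""
    value = None
    for source in sources:  # ordered from lowest to highest precedence
        if name in source:
            value = source[name]
    return value


# Sources in the order of the list above (values are illustrative).
sources = [
    {"DAGMAN_MAX_JOBS_SUBMITTED": "100"},  # HTCondor configuration file
    {},                                    # environment variables
    {"DAGMAN_MAX_JOBS_SUBMITTED": "50"},   # DAG-specific CONFIG file
    {"DAGMAN_MAX_JOBS_SUBMITTED": "10"},   # condor_submit_dag -maxjobs
]
```

Here resolve_setting("DAGMAN_MAX_JOBS_SUBMITTED", sources) returns the command-line value, and dropping the last source would leave the value from the DAG-specific configuration file in effect.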

The CONFIG command within the DAG input file specifies a configuration file to be used to set configuration variables related to condor_dagman when running this DAG. The syntax for CONFIG is

CONFIG ConfigFileName

As an example, if the DAG input file contains:

CONFIG dagman.config

then the configuration values in file dagman.config will be used for this DAG. If the contents of file dagman.config are

DAGMAN_MAX_JOBS_IDLE = 10

then this configuration is defined for this DAG.

Only a single configuration file can be specified for a given condor_dagman run. For example, if one file is specified within a DAG input file, and a different file is specified on the condor_submit_dag command line, this is a fatal error at submit time. The same is true if different configuration files are specified in multiple DAG input files and referenced in a single condor_submit_dag command.

If multiple DAGs are run in a single condor_dagman run, the configuration options specified in the condor_dagman configuration file, if any, apply to all DAGs, even if some of the DAGs specify no configuration file.

Configuration variables that are not for condor_dagman and not utilized by DaemonCore, yet are specified in a condor_dagman-specific configuration file are ignored.

Setting ClassAd attributes in the DAG file

The SET_JOB_ATTR keyword within the DAG input file specifies an attribute/value pair to be set in the DAGMan job’s ClassAd. The syntax for SET_JOB_ATTR is

SET_JOB_ATTR AttributeName = AttributeValue

As an example, if the DAG input file contains:

SET_JOB_ATTR TestNumber = 17

the ClassAd of the DAGMan job itself will have an attribute

TestNumber with the value 17.

The attribute set by the SET_JOB_ATTR command is set only in the ClassAd of the DAGMan job itself - it is not propagated to node jobs of the DAG.

Values with spaces can be set by surrounding the string containing a space with single or double quotes. (Note that the quote marks themselves will be part of the value.)

Only a single attribute/value pair can be specified per SET_JOB_ATTR command. If the same attribute is specified multiple times in the DAG (or in multiple DAGs run by the same DAGMan instance) the last-specified value is the one that will be utilized. An attribute set in the DAG file can be overridden by specifying

-append '+<attribute> = <value>'

on the condor_submit_dag command line.

Optimization of Submission Time

condor_dagman works by watching log files for events, such as submission, termination, and going on hold. When a new job is ready to be run, it is submitted to the condor_schedd, which needs to acquire a computing resource. Acquisition requires the condor_schedd to contact the central manager and get a claim on a machine, and this claim cycle can take many minutes.

Configuration variable DAGMAN_HOLD_CLAIM_TIME avoids the wait for a negotiation cycle. When set to a non zero value, the condor_schedd keeps a claim idle, such that the condor_startd delays in shifting from the Claimed to the Preempting state (see Policy Configuration for Execute Hosts and for Submit Hosts). Thus, if another job appears that is suitable for the claimed resource, then the condor_schedd will submit the job directly to the condor_startd, avoiding the wait and overhead of a negotiation cycle. This results in a speed up of job completion, especially for linear DAGs in pools that have lengthy negotiation cycle times.

By default, DAGMAN_HOLD_CLAIM_TIME is 20, causing a claim to remain idle for 20 seconds, during which time a new job can be submitted directly to the already-claimed condor_startd. A value of 0 means that claims are not held idle for a running DAG. If a DAG node has no children, the value of DAGMAN_HOLD_CLAIM_TIME will be ignored; the KeepClaimIdle attribute will not be defined in the job ClassAd of the node job, unless the job requests it using the submit command keep_claim_idle.

Single Submission of Multiple, Independent DAGs

A single use of condor_submit_dag may execute multiple, independent DAGs. Each independent DAG has its own, distinct DAG input file. These DAG input files are command-line arguments to condor_submit_dag.

Internally, all of the independent DAGs are combined into a single, larger DAG, with no dependencies between the original independent DAGs. As a result, any generated Rescue DAG file represents all of the original independent DAGs with a single DAG. The file name of this Rescue DAG is based on the DAG input file listed first within the command-line arguments. For example, assume that three independent DAGs are submitted with

$ condor_submit_dag A.dag B.dag C.dag

The first listed is A.dag. The remainder of the specialized file name adds a suffix onto this first DAG input file name, A.dag. The suffix is _multi.rescue<XXX>, where <XXX> is substituted by the 3-digit number of the Rescue DAG created as defined in The Rescue DAG section. The first time a Rescue DAG is created for the example, it will have the file name A.dag_multi.rescue001.

Other files such as dagman.out and the lock file also have names based on this first DAG input file.
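The Rescue DAG naming rule for multiple independent DAGs can be written as a one-line Python function. The name multi_dag_rescue_name is a hypothetical helper used only to illustrate the rule.

```python
def multi_dag_rescue_name(first_dag_file: str, rescue_number: int) -> str:
    """Name of the Rescue DAG when several DAGs are submitted together.

    first_dag_file is the first DAG input file on the command line;
    rescue_number is formatted as a zero-padded 3-digit number.
    """
    return f"{first_dag_file}_multi.rescue{rescue_number:03d}"
```

For the command line above, the first Rescue DAG is multi_dag_rescue_name("A.dag", 1), that is, A.dag_multi.rescue001.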

The success or failure of the independent DAGs is well defined. When multiple, independent DAGs are submitted with a single command, the success of the composite DAG is defined as the logical AND of the success of each independent DAG. This implies that failure is defined as the logical OR of the failure of any of the independent DAGs.

By default, DAGMan internally renames the nodes to avoid node name collisions. If all node names are unique, the renaming of nodes may be disabled by setting the configuration variable DAGMAN_MUNGE_NODE_NAMES to False (see Configuration File Entries for DAGMan).

INCLUDE

The INCLUDE command allows the contents of one DAG file to be parsed as if they were physically included in the referencing DAG file. The syntax for INCLUDE is

INCLUDE FileName

For example, if we have two DAG files like this:

# File name: foo.dag

JOB A A.sub

INCLUDE bar.dag

# File name: bar.dag

JOB B B.sub

JOB C C.sub

this is equivalent to the single DAG file:

JOB A A.sub

JOB B B.sub

JOB C C.sub

Note that the included file must be in proper DAG syntax. Also, there are many cases where a valid included DAG file will cause a parse error, such as the including and included files defining nodes with the same name.

INCLUDE commands can be nested to any depth (be sure not to create a cycle of includes!).
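The textual effect of INCLUDE, including the danger of include cycles, can be sketched in Python. This is an illustration and not DAGMan's parser: files are represented as an in-memory dictionary, the keyword is matched exactly as written in uppercase, and expand_includes is a hypothetical name.

```python
from typing import Dict, List, Tuple


def expand_includes(
    file_name: str, files: Dict[str, List[str]], active: Tuple[str, ...] = ()
) -> List[str]:
    """Expand INCLUDE lines recursively; files maps file names to their lines."""
    if file_name in active:
        raise ValueError("INCLUDE cycle: " + " -> ".join(active + (file_name,)))
    expanded = []
    for line in files[file_name]:
        words = line.split()
        if len(words) == 2 and words[0] == "INCLUDE":
            # Replace the INCLUDE line with the included file's contents.
            expanded.extend(expand_includes(words[1], files, active + (file_name,)))
        else:
            expanded.append(line)
    return expanded
```

Applied to the foo.dag and bar.dag example above, the result is the three JOB lines for A, B, and C; a file that includes itself, directly or indirectly, raises an error in this sketch.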

Example: Using INCLUDE to simplify multiple similar workflows

One use of the INCLUDE command is to simplify the DAG files when we have a single workflow that we want to run on a number of data sets. In that case, we can do something like this:

# File name: workflow.dag

# Defines the structure of the workflow

JOB Split split.sub

JOB Process00 process.sub

...

JOB Process99 process.sub

JOB Combine combine.sub

PARENT Split CHILD Process00 ... Process99

PARENT Process00 ... Process99 CHILD Combine

# File name: split.sub

executable = my_split

input = $(dataset).phase1

output = $(dataset).phase2

...

# File name: data57.vars

VARS Split dataset="data57"

VARS Process00 dataset="data57"

...

VARS Process99 dataset="data57"

VARS Combine dataset="data57"

# File name: run_dataset57.dag

INCLUDE workflow.dag

INCLUDE data57.vars

Then, to run our workflow on dataset 57, we run the following command:

$ condor_submit_dag run_dataset57.dag

This avoids having to duplicate the JOB and PARENT/CHILD commands for every dataset - we can just re-use the workflow.dag file, in combination with a dataset-specific vars file.
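The dataset-specific files are repetitive enough that they are natural candidates for generation by a script. A minimal Python sketch follows, assuming the node names Split, Process00 through Process99, and Combine from the example; the function names are hypothetical.

```python
from typing import Iterable, List


def dataset_vars_lines(dataset: str, node_names: Iterable[str]) -> List[str]:
    """Return one VARS line per node, all setting the same dataset macro."""
    return [f'VARS {name} dataset="{dataset}"' for name in node_names]


def run_dag_lines(workflow_dag: str, vars_file: str) -> List[str]:
    """Return the two INCLUDE lines of the per-dataset top-level DAG file."""
    return [f"INCLUDE {workflow_dag}", f"INCLUDE {vars_file}"]


# Node names as used in workflow.dag in the example above.
node_names = ["Split"] + [f"Process{i:02d}" for i in range(100)] + ["Combine"]
vars_lines = dataset_vars_lines("data57", node_names)
dag_lines = run_dag_lines("workflow.dag", "data57.vars")
```

Writing vars_lines to data57.vars and dag_lines to run_dataset57.dag gives the two dataset-specific files shown above; only the dataset name changes from one run to the next.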

Composing workflows from multiple DAG files

The organization and dependencies of the jobs within a DAG are the keys to its utility. Some workflows are naturally constructed hierarchically, such that a node within a DAG is also a DAG (instead of a “simple” HTCondor job). HTCondor DAGMan handles this situation easily, and allows DAGs to be nested to any depth.

There are two ways that DAGs can be nested within other DAGs: sub-DAGs and splices (see Advanced Features of DAGMan).

With sub-DAGs, each DAG has its own condor_dagman job, which then becomes a node job within the higher-level DAG. With splices, on the other hand, the nodes of the spliced DAG are directly incorporated into the higher-level DAG. Therefore, splices do not result in additional condor_dagman instances.

A weakness in scalability exists when submitting external sub-DAGs, because each executing independent DAG requires its own instance of condor_dagman to be running. The outer DAG has an instance of condor_dagman, and each named SUBDAG has an instance of condor_dagman while it is in the HTCondor queue. The scaling issue presents itself when a workflow contains hundreds or thousands of sub-DAGs that are queued at the same time. (In this case, the resources (especially memory) consumed by the multiple condor_dagman instances can be a problem.) Further, there may be many Rescue DAGs created if a problem occurs. (Note that the scaling issue depends only on how many sub-DAGs are queued at any given time, not the total number of sub-DAGs in a given workflow; division of a large workflow into sequential sub-DAGs can actually enhance scalability.) To alleviate these concerns, the DAGMan language introduces the concept of graph splicing.

Because splices are simpler in some ways than sub-DAGs, they are generally preferred unless certain features are needed that are only available with sub-DAGs. This document: https://htcondor-wiki.cs.wisc.edu/index.cgi/wiki?p=SubDagsVsSplices explains the pros and cons of splices and external sub-DAGs, and should help users decide which alternative is better for their application.

Note that sub-DAGs and splices can be combined in a single workflow, and can be nested to any depth (but be sure to avoid recursion, which will cause problems!).

A DAG Within a DAG Is a SUBDAG

As stated above, the SUBDAG EXTERNAL command causes the specified DAG file to be run by a separate instance of condor_dagman, with the condor_dagman job becoming a node job within the higher-level DAG.

The syntax for the SUBDAG command is

SUBDAG EXTERNAL JobName DagFileName [DIR directory] [NOOP] [DONE]

The optional specifications of DIR, NOOP, and DONE, if used, must appear in this order within the entry. NOOP and DONE for SUBDAG nodes have the same effect that they do for JOB nodes.

A SUBDAG node is essentially the same as any other node, except that the DAG input file for the inner DAG is specified, instead of the HTCondor submit file. The keyword EXTERNAL means that the SUBDAG is run within its own instance of condor_dagman.

Since more than one DAG is being discussed, some terminology helps to clarify which DAG is which. The example diamond-shaped DAG is reused in the following description. Assume that node B of this diamond-shaped DAG will itself be a DAG. The DAG of node B is called a SUBDAG, inner DAG, or lower-level DAG. The diamond-shaped DAG is called the outer or top-level DAG.

Work on the inner DAG first. Here is a very simple linear DAG input file used as an example of the inner DAG.

# File name: inner.dag

JOB X X.submit

JOB Y Y.submit

JOB Z Z.submit

PARENT X CHILD Y

PARENT Y CHILD Z

The HTCondor submit description file, used by condor_dagman, corresponding to inner.dag will be named inner.dag.condor.sub. The DAGMan submit description file is always named <DAG file name>.condor.sub. Each DAG or SUBDAG results in the submission of condor_dagman as an HTCondor job, and condor_submit_dag creates this submit description file.

The preferred specification of the DAG input file for the outer DAG is

# File name: diamond.dag

JOB A A.submit

SUBDAG EXTERNAL B inner.dag

JOB C C.submit

JOB D D.submit

PARENT A CHILD B C

PARENT B C CHILD D

Within the outer DAG’s input file, the SUBDAG command specifies a special case of a JOB node, where the job is itself a DAG.

One of the benefits of using the SUBDAG feature is that portions of the overall workflow can be constructed and modified during the execution of the DAG (a SUBDAG file doesn’t have to exist until just before it is submitted). A drawback is that each SUBDAG causes its own distinct job submission of condor_dagman, leading to a larger number of jobs. Because each SUBDAG has its own throttles, the workflow may also need carefully constructed policy configuration to throttle node submission or execution.

Here are details that affect SUBDAGs:

Nested DAG Submit Description File Generation

There are three ways to generate the <DAG file name>.condor.sub file of a SUBDAG:

Lazily (the default in HTCondor version 7.5.2 and later versions)

Eagerly (the default in HTCondor versions 7.4.1 through 7.5.1)

Manually (the only way prior to HTCondor version 7.4.1)

When the <DAG file name>.condor.sub file is generated lazily, this file is generated immediately before the SUBDAG job is submitted. Generation is accomplished by running

$ condor_submit_dag -no_submit

on the DAG input file specified in the SUBDAG entry. This is the default behavior. There are advantages to this lazy mode of submit description file creation for the SUBDAG:

The DAG input file for a SUBDAG does not have to exist until the SUBDAG is ready to run, so this file can be dynamically created by earlier parts of the outer DAG or by the PRE script of the node containing the SUBDAG.

It is now possible to have SUBDAGs within splices. That is not possible with eager submit description file creation, because condor_submit_dag does not understand splices.

The main disadvantage of lazy submit file generation is that a syntax error in the DAG input file of a SUBDAG will not be discovered until the outer DAG tries to run the inner DAG.
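Because the inner DAG input file only has to exist when the SUBDAG is ready to run, an earlier node or a PRE script can write it. The following is a minimal sketch of such a generator, assuming a linear chain of nodes and the <node name>.submit naming used in the inner.dag example. The function names are hypothetical.

```python
from pathlib import Path
import tempfile


def linear_dag_text(node_names):
    """Return DAG input file text for a linear chain of nodes."""
    lines = [f"JOB {name} {name}.submit" for name in node_names]
    # Each node is the parent of the next one in the chain.
    for parent, child in zip(node_names, node_names[1:]):
        lines.append(f"PARENT {parent} CHILD {child}")
    return "\n".join(lines) + "\n"


def write_inner_dag(path, node_names):
    """Write the inner DAG file; intended to run before the SUBDAG node starts."""
    Path(path).write_text(linear_dag_text(node_names))


# Demonstration in a temporary directory so no real workflow files are touched.
with tempfile.TemporaryDirectory() as tmp:
    target = Path(tmp) / "inner.dag"
    write_inner_dag(target, ["X", "Y", "Z"])
    print(target.read_text(), end="")
```

A script like this could be attached to node B of the outer DAG as its PRE script, so that inner.dag is created just before the SUBDAG is submitted. As noted above, a mistake in the generated file would then surface only when the outer DAG tries to run the inner DAG.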

When <DAG file name>.condor.sub files are generated eagerly, condor_submit_dag runs itself recursively (with the -no_submit option) on each SUBDAG, so all of the <DAG file name>.condor.sub files are generated before the top-level DAG is actually submitted. To generate the <DAG file name>.condor.sub files eagerly, pass the -do_recurse flag to condor_submit_dag; also set the DAGMAN_GENERATE_SUBDAG_SUBMITS configuration variable to False, so that condor_dagman does not re-run condor_submit_dag at run time, thereby regenerating the submit description files.

To generate the .condor.sub files manually, run

$ condor_submit_dag -no_submit

on each lower-level DAG file, before running condor_submit_dag on the top-level DAG file; also set the DAGMAN_GENERATE_SUBDAG_SUBMITS configuration variable to False, so that condor_dagman does not re-run condor_submit_dag at run time. The main reason for generating the <DAG file name>.condor.sub files manually is to set options for the lower-level DAG that one would not otherwise be able to set. An example of this is the -insert_sub_file option. For instance, using the given example, do the following to manually generate HTCondor submit description files:

$ condor_submit_dag -no_submit -insert_sub_file fragment.sub inner.dag

$ condor_submit_dag diamond.dag
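For workflows with several lower-level DAGs, the manual procedure can be scripted. The sketch below only builds the command lines and prints them; it does not run anything. It uses only the -no_submit and -insert_sub_file options mentioned above. The helper name is hypothetical, and setting DAGMAN_GENERATE_SUBDAG_SUBMITS to False is assumed to be handled separately in configuration.

```python
import shlex


def manual_generation_commands(top_dag, lower_dags, insert_sub_file=None):
    """Return condor_submit_dag command lines, lower-level DAGs first."""
    commands = []
    for dag in lower_dags:
        cmd = ["condor_submit_dag", "-no_submit"]
        if insert_sub_file is not None:
            cmd += ["-insert_sub_file", insert_sub_file]
        cmd.append(dag)
        commands.append(cmd)
    # The top-level DAG is submitted last, once every .condor.sub file exists.
    commands.append(["condor_submit_dag", top_dag])
    return commands


for cmd in manual_generation_commands("diamond.dag", ["inner.dag"], "fragment.sub"):
    print("$", shlex.join(cmd))
```

To execute the commands rather than print them, each list could be passed to subprocess.run(cmd, check=True) on a machine where HTCondor is installed.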

Note that most condor_submit_dag command-line flags have corresponding configuration variables, so we encourage the use of per-DAG configuration files, especially in the case of nested DAGs. This is the easiest way to set different options for different DAGs in an overall workflow.

It is possible to combine more than one method of generating the <DAG file name>.condor.sub files. For example, one might pass the -do_recurse flag to condor_submit_dag, but leave the DAGMAN_GENERATE_SUBDAG_SUBMITS configuration variable set to the default of True. Doing this would provide the benefit of an immediate error message at submit time, if there is a syntax error in one of the inner DAG input files, but the lower-level <DAG file name>.condor.sub files would still be regenerated before each nested DAG is submitted.

The values of the following command-line flags are passed from the top-level condor_submit_dag instance to any lower-level condor_submit_dag instances. This occurs whether the lower-level submit description files are generated lazily or eagerly:

-verbose

-force

-notification

-allowlogerror

-dagman

-usedagdir

-outfile_dir

-oldrescue

-autorescue

-dorescuefrom

-allowversionmismatch

-no_recurse/-do_recurse

-update_submit

-import_env

-suppress_notification

-priority

-dont_use_default_node_log

The values of the following command-line flags are preserved in any already-existing lower-level DAG submit description files:

-maxjobs

-maxidle

-maxpre

-maxpost

-debug

Other command-line arguments are set to their defaults in any lower-level invocations of condor_submit_dag.

The -force option will cause existing DAG submit description files to be overwritten without preserving any existing values.

Submission of the outer DAG

The outer DAG is submitted as before, with the command

$ condor_submit_dag diamond.dag

Interaction with Rescue DAGs

The use of new-style Rescue DAGs is now the default. With new-style rescue DAGs, the appropriate rescue DAG(s) will be run automatically if there is a failure somewhere in the workflow. For example (given the DAGs in the example at the beginning of the SUBDAG section), if one of the nodes in inner.dag fails, this will produce a Rescue DAG for inner.dag (named inner.dag.rescue.001). Then, since inner.dag failed, node B of diamond.dag will fail, producing a Rescue DAG for diamond.dag (named diamond.dag.rescue.001, etc.). If the command

$ condor_submit_dag diamond.dag

is re-run, the most recent outer Rescue DAG will be run, and this will re-run the inner DAG, which will in turn run the most recent inner Rescue DAG.
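When inspecting a failed workflow by hand, it can help to see which rescue file would be picked up. The sketch below assumes that the most recent Rescue DAG is the one with the highest numeric suffix in the <DAG file name>.rescue.NNN pattern shown above. The helper name is hypothetical, and DAGMan performs this selection itself.

```python
import re
from typing import Iterable, Optional


def latest_rescue_dag(dag_file: str, filenames: Iterable[str]) -> Optional[str]:
    """Return the highest-numbered <dag_file>.rescue.NNN name, or None."""
    pattern = re.compile(re.escape(dag_file) + r"\.rescue\.(\d+)")
    best_number, best_name = -1, None
    for name in filenames:
        match = pattern.fullmatch(name)
        if match and int(match.group(1)) > best_number:
            best_number, best_name = int(match.group(1)), name
    return best_name


files = ["inner.dag", "inner.dag.rescue.001", "inner.dag.rescue.002",
         "diamond.dag", "diamond.dag.rescue.001"]
print(latest_rescue_dag("inner.dag", files))
print(latest_rescue_dag("diamond.dag", files))
```

In practice the list of file names could come from os.listdir() on the directory holding the DAG files.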

File Paths

Remember that, unless the DIR keyword is used in the outer DAG, the inner DAG runs in the directory that was the current working directory when the outer DAG was submitted. Therefore, all paths used by the inner DAG file must be specified accordingly.
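The effect on relative paths can be sketched as follows. This illustration assumes that a relative DIR value is interpreted relative to the directory from which the outer DAG was submitted, and that an absolute DIR value is used as is. Both function names are hypothetical.

```python
from pathlib import PurePosixPath
from typing import Optional


def inner_dag_working_dir(submit_cwd: str,
                          dir_keyword: Optional[str] = None) -> PurePosixPath:
    """Directory the inner DAG is assumed to run from."""
    base = PurePosixPath(submit_cwd)
    if dir_keyword is None:
        return base               # no DIR: the submit-time working directory
    # Joining with an absolute DIR value replaces the base entirely.
    return base / dir_keyword


def resolve_inner_path(submit_cwd: str, relative_path: str,
                       dir_keyword: Optional[str] = None) -> PurePosixPath:
    """Where a relative path named in the inner DAG file would point."""
    return inner_dag_working_dir(submit_cwd, dir_keyword) / relative_path


print(resolve_inner_path("/home/user/run", "X.submit"))
print(resolve_inner_path("/home/user/run", "X.submit", dir_keyword="sub"))
```

The first call resolves X.submit next to the outer DAG's submit directory; the second resolves it inside the directory named by DIR.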

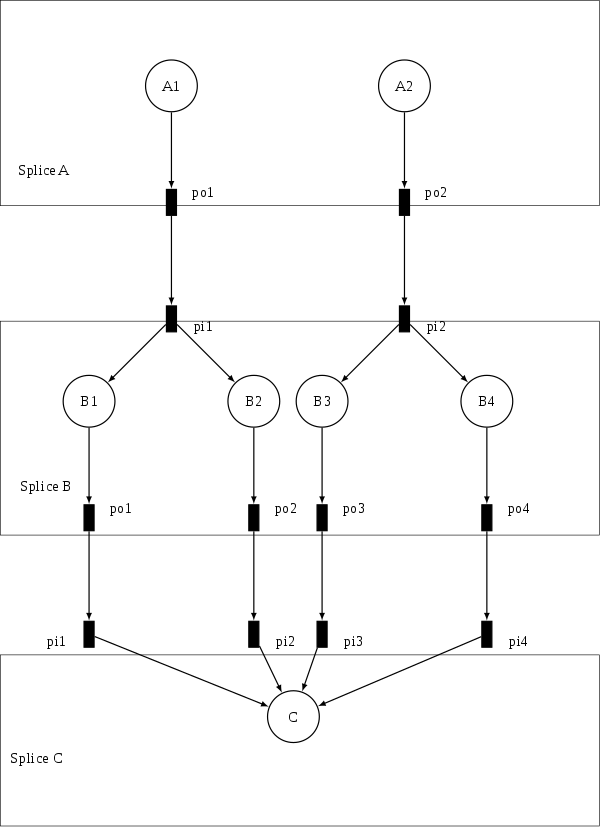

DAG Splicing

As stated above, the SPLICE command causes the nodes of the spliced DAG to be directly incorporated into the higher-level DAG (the DAG containing the SPLICE command).

The syntax for the SPLICE command is

SPLICE SpliceName DagFileName [DIR directory]

A splice is a named instance of a subgraph which is specified in a separate DAG file. The splice is treated as an entity for dependency specification in the including DAG. (Conceptually, a splice is treated as a node within the DAG containing the SPLICE command, although there are some limitations, which are discussed below. This means, for example, that splices can have parents and children.) A splice can also be incorporated into an including DAG without any dependencies; it is then considered a disjoint DAG within the including DAG.

The same DAG file can be reused as differently named splices, each one incorporating a copy of the dependency graph (and nodes therein) into the including DAG.

The nodes within a splice are scoped according to a hierarchy of names associated with the splices, as the splices are parsed from the top level DAG file. The scoping character to describe the inclusion hierarchy of nodes into the top level DAG is ‘+’. (In other words, if a splice named “SpliceX” contains a node named “NodeY”, the full node name once the DAGs are parsed is “SpliceX+NodeY”.) This character is chosen due to a restriction in the allowable characters which may be in a file name across the variety of platforms that HTCondor supports. In any DAG input file, all splices must have unique names, but the same splice name may be reused in different DAG input files.
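The scoping rule amounts to joining the enclosing splice names and the node name with the ‘+’ character. A minimal sketch, assuming that nested splices are listed outermost first (the function name is hypothetical):

```python
SCOPE_CHAR = "+"


def scoped_node_name(splice_path, node_name):
    """Join enclosing splice names (outermost first) and the node name."""
    return SCOPE_CHAR.join([*splice_path, node_name])


print(scoped_node_name(["SpliceX"], "NodeY"))
print(scoped_node_name(["Outer", "Inner"], "A"))
print(scoped_node_name([], "A"))
```

A node that is not inside any splice keeps its plain name, which is why an empty splice path returns the node name unchanged.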

HTCondor neither detects nor supports splices that form a cycle within the DAG. A DAGMan job that causes a cyclic inclusion of splices will eventually exhaust available memory and crash.
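Since cyclic splice inclusion is not detected, a pre-submission check can be worthwhile. The following sketch is not part of HTCondor: it scans DAG input file text for SPLICE lines and performs a depth-first search over the inclusions. For simplicity it takes a hypothetical mapping from file name to file contents instead of reading from disk, and it ignores the DIR option.

```python
def splice_targets(dag_text):
    """Return DAG file names referenced by SPLICE lines in a DAG input file."""
    targets = []
    for line in dag_text.splitlines():
        fields = line.split()
        # Syntax from the text: SPLICE SpliceName DagFileName [DIR directory]
        if len(fields) >= 3 and fields[0].upper() == "SPLICE":
            targets.append(fields[2])
    return targets


def find_splice_cycle(dag_files, start):
    """Return a list of file names forming an inclusion cycle, or None.

    dag_files maps a DAG file name to its text (a stand-in for reading disk).
    """
    def visit(name, stack):
        if name in stack:
            # The file is already being expanded: report the loop.
            return stack[stack.index(name):] + [name]
        for target in splice_targets(dag_files.get(name, "")):
            cycle = visit(target, stack + [name])
            if cycle:
                return cycle
        return None

    return visit(start, [])


cyclic = {
    "a.dag": "JOB A submit.condor\nSPLICE S b.dag\n",
    "b.dag": "SPLICE T a.dag\n",
}
print(find_splice_cycle(cyclic, "a.dag"))
```

A return value of None means no cyclic inclusion was found among the files provided; any other result lists the files that include each other.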

The SPLICE command in a DAG input file creates a named instance of the DAG specified in another file. This instance is an entity that may have PARENT and CHILD dependencies with other splice names or node names in the including DAG file.

The following series of examples illustrates potential uses of splicing. To simplify the examples, presume that each and every job uses the same, simple HTCondor submit description file:

# BEGIN SUBMIT FILE submit.condor

executable = /bin/echo

arguments = OK

universe = vanilla

output = $(jobname).out

error = $(jobname).err

log = submit.log

notification = NEVER

queue

# END SUBMIT FILE submit.condor

This first simple example splices a diamond-shaped DAG in between the two nodes of a top level DAG. Here is the DAG input file for the diamond-shaped DAG:

# BEGIN DAG FILE diamond.dag

JOB A submit.condor

VARS A jobname="$(JOB)"

JOB B submit.condor

VARS B jobname="$(JOB)"

JOB C submit.condor

VARS C jobname="$(JOB)"

JOB D submit.condor

VARS D jobname="$(JOB)"

PARENT A CHILD B C

PARENT B C CHILD D

# END DAG FILE diamond.dag

The top level DAG incorporates the diamond-shaped splice:

# BEGIN DAG FILE toplevel.dag

JOB X submit.condor

VARS X jobname="$(JOB)"

JOB Y submit.condor

VARS Y jobname="$(JOB)"

# This is an instance of diamond.dag, given the symbolic name DIAMOND

SPLICE DIAMOND diamond.dag

# Set up a relationship between the nodes in this dag and the splice

PARENT X CHILD DIAMOND

PARENT DIAMOND CHILD Y

# END DAG FILE toplevel.dag

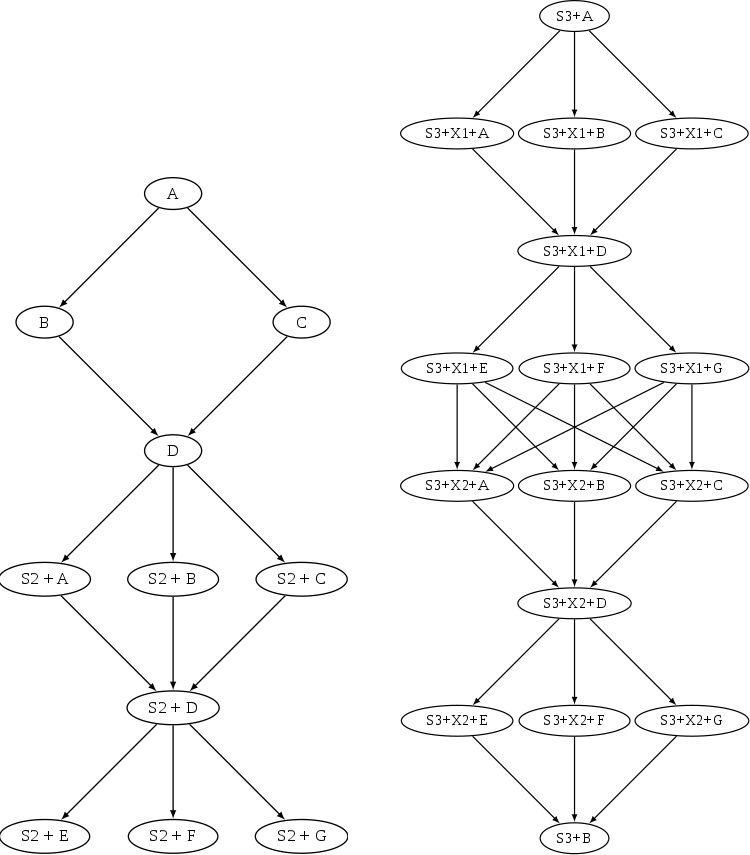

The following example illustrates the resulting top level DAG and the dependencies produced. Notice the naming of nodes scoped with the splice name. This hierarchy of splice names assures unique names associated with all nodes.

The diamond-shaped DAG spliced between two nodes.
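The dependencies of the combined DAG can also be worked out programmatically. The sketch below assumes that a parent of a splice becomes a parent of every node in the splice that has no parent of its own, and that every node in the splice without children becomes a parent of the splice's children. The function name and data layout are hypothetical.

```python
def expand_splice(splice_name, splice_nodes, splice_edges, parents, children):
    """Return the edges of the including DAG after the splice is incorporated.

    splice_edges are (parent, child) pairs inside the spliced DAG.
    parents and children are nodes of the including DAG attached to the splice.
    """
    scoped = {n: f"{splice_name}+{n}" for n in splice_nodes}
    has_parent = {c for _, c in splice_edges}
    has_child = {p for p, _ in splice_edges}
    initial = [n for n in splice_nodes if n not in has_parent]
    final = [n for n in splice_nodes if n not in has_child]

    edges = [(p, scoped[n]) for p in parents for n in initial]
    edges += [(scoped[p], scoped[c]) for p, c in splice_edges]
    edges += [(scoped[n], c) for n in final for c in children]
    return edges


diamond_nodes = ["A", "B", "C", "D"]
diamond_edges = [("A", "B"), ("A", "C"), ("B", "D"), ("C", "D")]
for parent, child in expand_splice("DIAMOND", diamond_nodes, diamond_edges, ["X"], ["Y"]):
    print(f"{parent} -> {child}")
```

For toplevel.dag this yields X as the parent of DIAMOND+A and DIAMOND+D as the parent of Y, with the diamond's internal edges carried over under scoped names.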

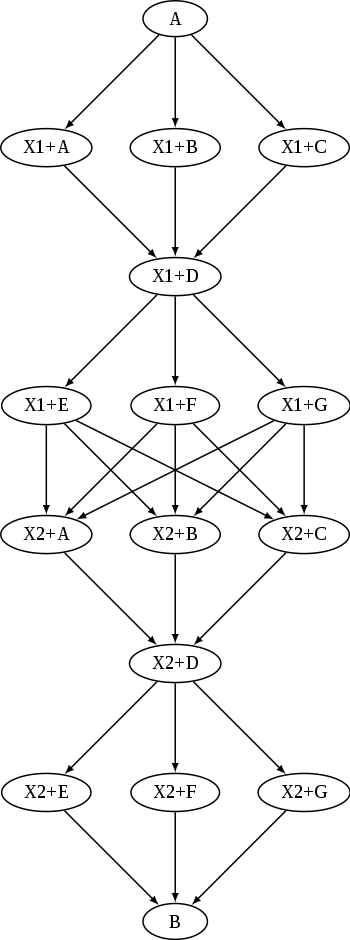

The next example illustrates the starting point for a more complex example. The DAG input file X.dag describes this X-shaped DAG. The completed example displays more of the spatial constructs provided by splices. Pay particular attention to the notion that each named splice creates a new graph, even when the same DAG input file is specified.

# BEGIN DAG FILE X.dag

JOB A submit.condor

VARS A jobname="$(JOB)"

JOB B submit.condor

VARS B jobname="$(JOB)"

JOB C submit.condor

VARS C jobname="$(JOB)"

JOB D submit.condor

VARS D jobname="$(JOB)"

JOB E submit.condor

VARS E jobname="$(JOB)"

JOB F submit.condor

VARS F jobname="$(JOB)"

JOB G submit.condor

VARS G jobname="$(JOB)"

# Make an X-shaped dependency graph

PARENT A B C CHILD D

PARENT D CHILD E F G

# END DAG FILE X.dag

The X-shaped DAG.

File s1.dag continues the example, presenting the DAG input file that incorporates two separate splices of the X-shaped DAG. The next description illustrates the resulting DAG.

# BEGIN DAG FILE s1.dag

JOB A submit.condor

VARS A jobname="$(JOB)"

JOB B submit.condor

VARS B jobname="$(JOB)"

# name two individual splices of the X-shaped DAG

SPLICE X1 X.dag